Beyond the Tree: Building an AI-Ready Taxonomy for Millions of SKUs

It usually starts with a spreadsheet.

It's Monday morning. A catalog lead opens a fresh file from a key supplier: another revision of their product data. The layout looks familiar, but not identical. A few headers are renamed, some categories are consolidated, and there's a new column for "applications."

The team knows the routine. They compare this version with the last one, work out how the supplier's categories map into internal ones, and decide what needs to change in the PIM or ERP so the website and quoting tools stay consistent.

By mid-morning they're checking which SKUs have shifted between vendor categories, matching new labels to existing branches in the internal hierarchy, tweaking titles or attributes so they still make sense in context, and escalating edge cases to a product manager or sales engineer.

For teams responsible for millions of SKUs and many suppliers, this isn't an exception. It's the job. Supplier schemas drift. The same product family might be described three different ways over the course of a year. Even within a single vendor, naming, attributes, and categories aren't static.

In our last piece on catalog operations, we looked at this from the pipeline side: how AI can help ingest, normalize, and validate supplier data so it's usable downstream.

This time, I want to focus on the structure all of that work is trying to preserve.

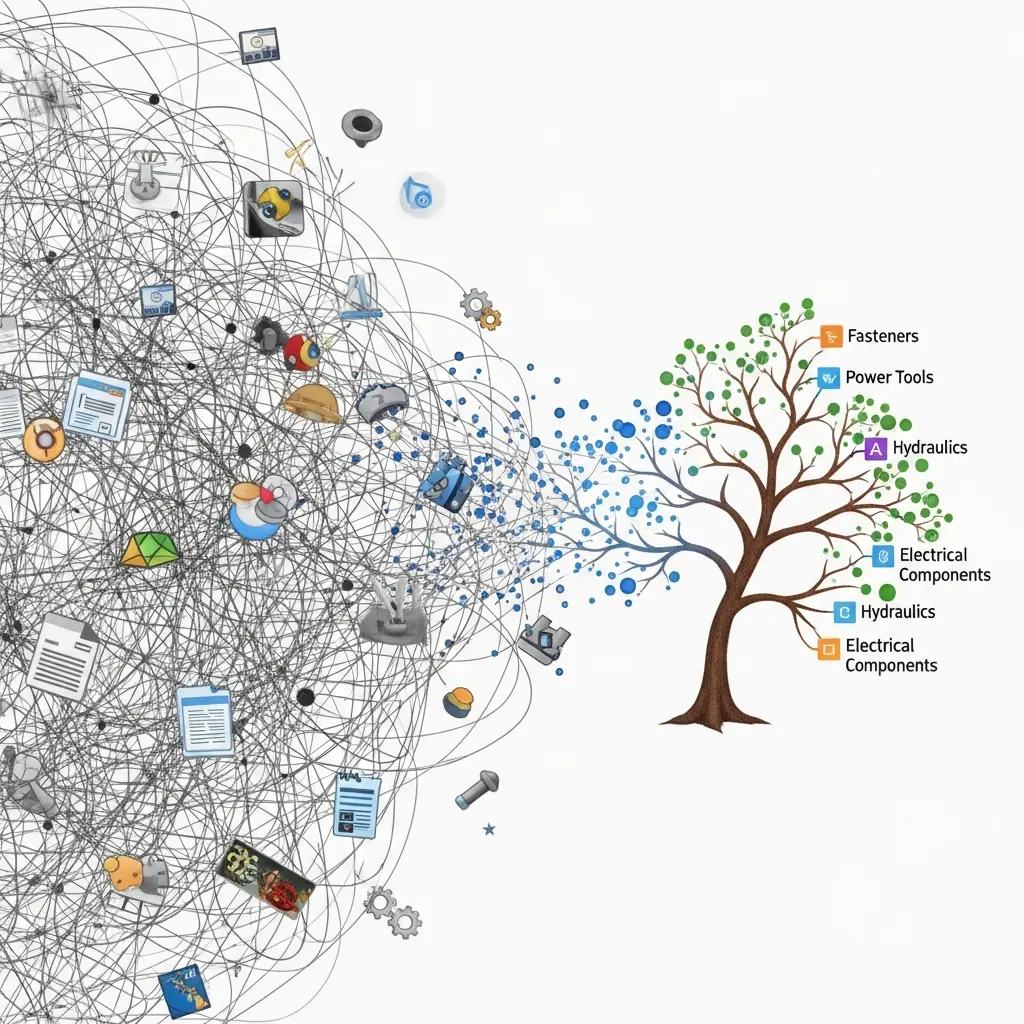

Beneath the spreadsheets, workflows, and review queues, there's something quieter that decides how hard this is, and how far AI can actually help: the taxonomy.

Taxonomy as Infrastructure

On the surface, taxonomy looks like a back-office concern: a tree of categories and subcategories that only a few people touch.

In reality, it behaves like infrastructure.

A product taxonomy underpins most of the key workflows around complex, technical products: how customers and reps move from a general need (say, a fire-rated exit device for a hospital corridor) to a focused set of product families; how unstructured requests (emails, drawings, spec sheets) get translated into SKUs and valid alternates; how the organization encodes what can safely substitute for what and under which conditions; and how the business understands performance by product family, application, or segment.

When the taxonomy is coherent, supplier data has a predictable place to land, search and filters behave in ways that match how customers think, and new product lines can be slotted in without inventing one-off structures.

When it isn't, catalog teams spend significant time reconciling supplier language with inconsistent internal categories, inside sales and product managers rely on personal spreadsheets and mental maps instead of the system, and some product families are effectively hidden, not because the data is missing, but because the structure doesn't reflect how people look for them.

This matters for AI as well.

In catalog operations, AI can help parse files, align attributes, and flag anomalies. But if the underlying taxonomy is fragmented, models are working around a structural problem: similar products are scattered under different branches; important distinctions like function, application, and compatibility are encoded differently (or not at all) across categories; and there's no stable, machine-readable map of the product universe to reason over.

To move from AI that merely copes with messy data to AI that consistently supports search, quoting, and replacement decisions, you need that map: a taxonomy that reflects how your customers and internal teams actually talk about products, a hierarchy and attribute model that makes sense to machines and humans, and a way to keep that structure aligned as millions of SKUs and ongoing supplier updates flow through.

The problem is that most industrial taxonomies weren't built with any of this in mind.

How We Ended Up Here

Most industrial taxonomies were never really "designed." They grew.

The starting point was usually an ERP or PIM implementation. Someone needed a way to group products for pricing, reporting, and basic navigation, so a simple hierarchy was created. Over time, that hierarchy absorbed new realities: new product lines and services, acquisitions and brand consolidations, and custom structures requested by large customers or specific channels.

Each change made sense in the moment. A new branch here, a catch-all category there, a one-off level inserted to support a key account. Over ten or twenty years, the result is a tree that reflects every past decision, not today's strategy.

Suppliers add another layer. Many distributors and manufacturers adopted vendor terminology directly to "move faster" when onboarding new lines. Those choices accumulate. Similar products end up described with different family names in different parts of the tree. The same function might be represented under multiple L2 or L3 categories depending on which vendor came first. Attributes are encoded inconsistently across branches, even for closely related products.

For teams managing millions of SKUs, this legacy structure becomes a constraint. Everyone can sense it's not quite right, but it's deeply wired into pricing, reporting, integrations, and habit. Changing it feels risky, so adjustments stay local and incremental.

The net effect is a taxonomy that works well enough to keep the business running, but not well enough to support the level of automation and AI that leadership is now asking for.

Why Big Taxonomy Projects Stall Out

Most attempts to "fix" taxonomy follow a familiar pattern.

A cross-functional team forms: catalog, product management, eCommerce, sometimes IT. They export the current tree into spreadsheets and start designing a better version: combining overlapping categories, introducing new levels where things feel overloaded, and renaming branches to better match how customers talk.

On a small catalog, this can work. On a catalog with millions of SKUs and hundreds of suppliers, three problems show up quickly.

First, it depends on scarce experts. The people who understand the full picture (product managers, senior catalog staff, experienced sales engineers) also have full-time jobs. They can weigh in on the hardest questions, but they can't manually audit every category, attribute, and title.

Second, it doesn't stay current. Even if you "clean up" the taxonomy once, suppliers keep changing schemas and new lines keep coming in. Without a way to continuously align new data to the updated structure, drift returns quickly.

Third, the content work doesn't scale. Structural changes imply content changes: naming conventions, clearer titles, standardized descriptions. Doing that SKU by SKU is slow and hard to maintain.

Because of this, many organizations scope their efforts narrowly (one brand or one branch) while the rest of the catalog stays as-is. You end up with pockets of well-structured families surrounded by older, inconsistent branches. AI can help around the edges, but it's still operating on uneven ground.

What's changed in the last few years is that AI can now handle most of the repetitive analysis and content generation that used to make taxonomy work feel unmanageable.

The opportunity is to redesign the process.

Designing for an AI-Led Taxonomy

The core shift is simple: instead of asking humans to manually inspect and re-label the entire tree, you design the taxonomy so that models can do most of the heavy lifting, while people focus on direction, review, and exceptions.

A few principles matter here.

Customer-first language

Start from how engineers, spec writers, and inside sales describe problems and products. That language becomes the reference for how categories are named and differentiated, so search, navigation, and RFQs all point to the same conceptual map.

Evidence-based structure

Use real signals, not just internal opinions, to inform the shape of the tree. Product data across suppliers, search queries and click paths, RFQ and quoting patterns, and support questions all tell you how people actually view and use your catalog. Models are good at reading these at scale and surfacing patterns: which terms cluster together, which products are treated as interchangeable, where customers seem confused.

Stable backbone, flexible edges

Treat the upper levels (L1/L2) as long-lived domains that rarely change, and deeper levels (L3/L4 and attributes) as deliberately flexible. That gives you a stable anchor for reporting and systems, while leaving room for AI to suggest splits, merges, and attribute adjustments without breaking anything.

Automation-aware conventions

Define naming and attribute rules so models can apply them consistently. Set clear patterns for titles and short descriptions, decide which distinctions belong in the category versus in attributes, and standardize how you encode function, form, and application. Once you do this, taxonomy stops being a one-off redesign exercise and becomes something AI can help manage continuously.
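As a rough illustration of what a rule that models can apply consistently might look like, here is a minimal Python sketch of a title convention. The pattern, the attribute names (`family`, `function`, `form`, `application`), and the `render_title` helper are hypothetical. What matters is that the rule is explicit and refuses to guess when a required attribute is missing, so gaps surface for review instead of turning into inconsistent titles.

```python
# Hypothetical title convention: "<family>, <function>, <form>, <application>".
# The attribute names are illustrative assumptions, not a standard schema.
TITLE_PATTERN = "{family}, {function}, {form}, {application}"
REQUIRED_ATTRIBUTES = ("family", "function", "form", "application")


def render_title(attrs: dict) -> str:
    """Build a standardized title from normalized attributes.

    Raises ValueError instead of guessing when a required attribute is
    missing or blank, so the record can be routed to a human.
    """
    missing = [k for k in REQUIRED_ATTRIBUTES if not str(attrs.get(k, "")).strip()]
    if missing:
        raise ValueError(f"missing attributes: {', '.join(missing)}")
    # Extra keys in attrs are ignored; only the convention's fields are used.
    values = {k: str(attrs[k]).strip() for k in REQUIRED_ATTRIBUTES}
    return TITLE_PATTERN.format(**values)


print(render_title({
    "family": "Exit Device",
    "function": "Panic Hardware",
    "form": "Rim Mount",
    "application": "Fire-Rated",
}))
# → Exit Device, Panic Hardware, Rim Mount, Fire-Rated
```

In an AI-led process, the model's job would be extracting and normalizing the attribute values; the convention itself stays a plain, reviewable rule.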

What It Looks Like in Practice

In practice, an AI-led, human-governed taxonomy process has three loops: analyze, generate, review.

In the analyze loop, models scan the whole catalog instead of sampling a few branches. For each leaf category, AI can look at product content (titles, descriptions, key attributes) alongside volume and performance metrics such as SKU count, traffic, revenue, and margin, and behavioral signals such as common search terms, RFQ language, and support questions. From that, it can flag categories that are overloaded (too many distinct product families in one bucket), redundant (describing essentially the same thing as another category), or orphaned (low volume and weak signals, and possibly better merged elsewhere). The output isn't "here is your new taxonomy." It's a scored map of where the current structure is helping or hurting.
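The analyze loop is easiest to picture as a scoring pass over leaf categories. The sketch below is a deliberately simple, rule-based stand-in for what a model would do: the `LeafCategory` fields, the thresholds, and the use of term-set overlap (Jaccard similarity) to spot redundant categories are all assumptions for illustration, not a prescribed method.

```python
from dataclasses import dataclass, field

# Illustrative thresholds (assumptions); tune them against expert-reviewed examples.
MAX_FAMILIES = 5          # more distinct families than this suggests "overloaded"
MIN_SKUS = 20             # falling below both volume floors suggests "orphan"
MIN_SEARCHES = 10
REDUNDANCY_OVERLAP = 0.6  # term overlap at or above this suggests "redundant"


@dataclass
class LeafCategory:
    name: str
    sku_count: int
    monthly_searches: int
    product_families: set = field(default_factory=set)
    top_terms: set = field(default_factory=set)  # e.g. frequent search/RFQ terms


def jaccard(a: set, b: set) -> float:
    """Share of terms two sets have in common (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def flag_categories(leaves: list) -> dict:
    """Return {category name: [flags]} for every leaf category."""
    flags = {leaf.name: [] for leaf in leaves}
    for leaf in leaves:
        if len(leaf.product_families) > MAX_FAMILIES:
            flags[leaf.name].append("overloaded")
        if leaf.sku_count < MIN_SKUS and leaf.monthly_searches < MIN_SEARCHES:
            flags[leaf.name].append("orphan")
    # Pairwise comparison is fine for a sketch; at catalog scale you would
    # likely use embeddings plus approximate nearest-neighbor search instead.
    for i, a in enumerate(leaves):
        for b in leaves[i + 1:]:
            if jaccard(a.top_terms, b.top_terms) >= REDUNDANCY_OVERLAP:
                flags[a.name].append(f"redundant with {b.name}")
                flags[b.name].append(f"redundant with {a.name}")
    return flags
```

Whatever produces the evidence, the output has the same shape: a per-category list of issues that experts can sort by revenue, margin, or risk.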

In the generate loop, once problem areas are identified, AI proposes concrete changes. It suggests clearer category names that use customer language but stay consistent with brand and product strategy. It can recommend splits or merges based on how products and queries actually cluster, and it can generate standardized titles and short descriptions that follow the patterns you've defined. This is where a lot of work that used to sit with copywriters and catalog specialists shifts to models. You define the rules and examples; AI applies them across thousands or millions of records.

In the review loop, human experts still matter, but their role changes. Instead of hand-editing every category and SKU, they review structural changes in the branches that matter most for revenue, margin, or risk; refine naming where nuance matters, such as regulatory, safety, or high-stakes fitment; and set and adjust guardrails, such as when to introduce a new category versus an attribute and which domains are off-limits to automated changes. Most of this can happen in a workflow where AI presents proposed changes with the data behind them, and experts approve, modify, or reject them in batches.
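Guardrails like these can be written down as explicit routing rules. A minimal sketch, assuming a hypothetical `ProposedChange` record with a category path, a change kind, and a model confidence score: changes in protected domains, at the L1/L2 backbone, or that split or merge categories always go to expert review, and only high-confidence edits deeper in the tree are applied automatically. The domain name, thresholds, and change kinds are placeholders.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_APPLY = "auto_apply"
    EXPERT_REVIEW = "expert_review"


@dataclass(frozen=True)
class ProposedChange:
    category_path: tuple  # e.g. ("Door Hardware", "Exit Devices", "Rim")
    kind: str             # hypothetical kinds: "rename", "title", "split", "merge"
    confidence: float     # model-reported score in [0, 1]


# Placeholder guardrail settings, owned by the governance team.
PROTECTED_DOMAINS = {"Fire & Life Safety"}  # off-limits to automated changes
BACKBONE_DEPTH = 2                          # L1/L2 form the stable backbone
STRUCTURAL_KINDS = {"split", "merge"}
AUTO_APPLY_MIN_CONFIDENCE = 0.9


def route(change: ProposedChange) -> Route:
    """Decide whether a proposed change may be applied without a human."""
    path = change.category_path
    if path and path[0] in PROTECTED_DOMAINS:
        return Route.EXPERT_REVIEW
    if len(path) <= BACKBONE_DEPTH or change.kind in STRUCTURAL_KINDS:
        return Route.EXPERT_REVIEW
    if change.confidence >= AUTO_APPLY_MIN_CONFIDENCE:
        return Route.AUTO_APPLY
    return Route.EXPERT_REVIEW
```

Keeping the rules this explicit makes the balance between autonomy and control auditable: tightening or loosening automation means changing a few settings, not retraining a model.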

The result is not a perfectly static tree. It's a taxonomy that can evolve continuously as new products, vendors, and behaviors emerge, without requiring a standing army to keep it upright.

Vendor Data as Fuel, Not Just a Headache

In our catalog-operations work, vendor data onboarding shows up as its own problem: different formats, shifting schemas, and inconsistent attribute models for broadly similar products.

For taxonomy, that same work becomes a useful signal.

As AI ingests and normalizes supplier files, it also learns how the industry describes product families: which terms vendors use for the same underlying function, how attributes are grouped or separated across brands, and where vendor structures align or clash with your internal view.

Instead of treating this as noise to be cleaned up and forgotten, you can feed it back into taxonomy decisions. It can highlight where your internal structure is missing an important distinction that multiple vendors agree on, show where you've over-segmented compared with how products are actually defined in the market, and reveal cases where the same concept shows up three different ways because of historical onboarding choices.

The goal isn't to copy vendor taxonomies. It's to use vendor language and structure as another piece of evidence in a customer-first, automation-aware taxonomy. Once you've agreed on that internal structure, models can keep mapping new vendor data into it, applying the same rules and guardrails, rather than starting from scratch for each new feed or schema revision.
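Once the internal structure is agreed, mapping new vendor labels into it can be sketched as a scored lookup with an explicit review threshold. The example below uses simple token overlap as a stand-in for a learned or embedding-based matcher; `INTERNAL_VOCAB`, the category paths, and the 0.3 threshold are hypothetical.

```python
import re
from typing import Optional, Tuple

# Hypothetical internal leaf categories, each with a customer-language vocabulary
# that can grow as vendor feeds reveal new synonyms for the same function.
INTERNAL_VOCAB = {
    "Door Hardware > Exit Devices > Rim": {"exit", "device", "panic", "rim", "bar"},
    "Door Hardware > Closers > Surface": {"door", "closer", "surface", "arm"},
}


def tokens(text: str) -> set:
    """Lowercase alphanumeric tokens of a vendor label."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def map_vendor_category(label: str, min_score: float = 0.3) -> Tuple[Optional[str], float]:
    """Map a vendor category label to the best-matching internal path.

    Returns (None, score) when nothing clears min_score, so the label is
    queued for human review instead of being forced into the tree.
    """
    vendor = tokens(label)
    best_path, best_score = None, 0.0
    for path, vocab in INTERNAL_VOCAB.items():
        union = vendor | vocab
        score = len(vendor & vocab) / len(union) if union else 0.0
        if score > best_score:
            best_path, best_score = path, score
    if best_score < min_score:
        return None, best_score
    return best_path, best_score
```

For example, a vendor label like "Panic Exit Bar - Rim Type" lands in the rim exit device category, while an unfamiliar label such as "Hinges, Continuous" comes back unmapped and goes to a person.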

Taxonomy as a Living System

With an AI-ready taxonomy and an AI-led process in place, the work shifts from big, infrequent projects to ongoing, manageable change.

In that steady state, new products and vendors are mapped using learned rules, with AI flagging only edge cases for human review. Structural drift is detected early because models monitor where products, queries, and categories are moving out of alignment. Content consistency (titles, short descriptions, key attributes) is maintained by shared rules, not one-off edits.
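One simple way to make early drift detection concrete, assuming you log whether each newly mapped SKU needed human review: compare each category's review rate in a recent window against a baseline, and flag categories where it is climbing. The event format and the 0.15 margin are assumptions; query and click signals could be monitored the same way.

```python
from collections import Counter


def review_rates(events: list) -> dict:
    """events: (category_path, needed_review) pairs for newly mapped SKUs."""
    total, flagged = Counter(), Counter()
    for path, needed_review in events:
        total[path] += 1
        flagged[path] += int(needed_review)
    return {path: flagged[path] / total[path] for path in total}


def drifting_categories(baseline: list, current: list, margin: float = 0.15) -> list:
    """Categories whose review rate rose by at least `margin` versus baseline.

    Categories absent from the baseline are compared against a rate of 0.0.
    """
    before, after = review_rates(baseline), review_rates(current)
    return sorted(p for p, rate in after.items() if rate - before.get(p, 0.0) >= margin)
```

A rising review rate is a cheap proxy that a branch no longer matches what suppliers are sending, which is the kind of signal that should reach a reviewer before the drift spreads.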

Governance doesn't go away, but it looks different. Reviews focus on the highest-impact branches, not the entire tree. Changes are made with clear visibility into the data behind them: how products cluster, how customers search, how vendors describe the same family. Updates can be rolled out in slices, measured, and adjusted instead of relying on a single big-bang redesign.

This is what turns taxonomy into a foundation for more capable AI, rather than a constraint. Search and recommendation models can rely on a stable set of concepts and relationships. Quoting workflows can map unstructured requests onto a structure that reflects how the business actually sells. Replacement and compatibility logic has a clearer place to live than someone's private spreadsheet.

Where This Goes Next

Even with a solid taxonomy in place, there are important open questions.

A few areas we're exploring with customers:

- Relationships beyond categories. Using the same AI-led approach to model compatibility, assemblies, replacements, and common configurations on top of the taxonomy.

- Autonomy versus control. Deciding which kinds of changes AI should be allowed to make automatically, and which should always require approval, especially in regulated or safety-critical domains.

- Cross-domain consistency. Ensuring that the way products are organized for search, quoting, and replacement is consistent enough that AI and humans are working from the same mental model.

Increasingly, I think of taxonomy as one piece of a broader product knowledge layer: the structure, relationships, and rules that let AI act more like a colleague and less like a search box.

If You're Sitting on Millions of SKUs

If you're responsible for a catalog with millions of SKUs, fragmented supplier data, and a taxonomy that no longer reflects how customers buy, you're not alone.

The pattern is familiar. The catalog works well enough to keep the business moving, and everyone can point to places where the structure makes their job harder than it needs to be. AI is on the roadmap, but it's not obvious how to connect ambitious use cases to the reality of today's data.

The good news is you don't need a massive, one-time taxonomy project to move forward. With the right principles and tooling, AI can take on most of the analysis and content work, while your experts focus on the decisions that actually require judgment.

Taxonomy is one part of a broader shift in how product data is managed and used. At Conversant, we're working with teams who want AI to participate meaningfully in catalog operations, quoting, and technical support, not just sit on top as another search bar. If you want to stress-test your current taxonomy or explore how an AI-led, human-governed approach could fit into that broader agenda, we'd be glad to compare notes.